Serverless Eventing: Modernizing Legacy Streaming with Kafka

Jason Smith

May 1, 2020

Knative Eventing offers a variety of EventSources to use for building a serverless eventing platform. In my previous blog post, I talked about SinkBinding, and we used this concept to create an EventSource that pulls Twitter data.

What happens when you have a legacy system though? It often doesn’t make practical sense to throw out a functioning system just to replace it with the “latest and greatest”. Also, software solutions rarely fit a “one size fits all” approach. You may also find yourself in a “hybrid” or “brownfield” situation.

For example, perhaps you have a legacy messaging bus that is not just used for your serverless application but also used for IoT devices. This is a perfect example of when you will have a “hybrid environment”. Dismantling a working system just to improve the backend makes no sense. We can still modernize our applications with serverless eventing.

I am a fan of Apache Kafka. Admittedly, I didn’t know much about Kafka prior to my time at Google. Through my interactions, I got to know the people at Confluent and they taught me about the ins and outs of Kafka. Scalyr has an amazing blog post that goes into the benefits of Kafka. Confluent also has some information here on Enterprise use-cases for Kafka.

My TL;DR is that Kafka is an open source stream-processing platform with high throughput, low latency, and reliable delivery. This is ideal for people who want to ensure that their messages are reliably sent in the order that they were received and in real time. That’s probably why a significant number of Fortune 100 companies use it.

In this demo, we will use the Confluent Cloud Operator. There are many others, such as Strimzi, but I chose the Confluent Operator for a few reasons.

- It is a bit more mature. Strimzi is still a CNCF Sandbox project, while the Confluent Operator is currently used in production deployments.

- Larger Community

- Comes with some interesting tools like Control Center

- I have been playing with it for longer

So let’s check out my tutorial here. I will wait for you to complete it.

Do Lab

Come Back

That was fun, wasn’t it? We basically created an application that pulls currency exchange information and sends it to a Kafka Producer. While this simple example doesn’t seem like much, imagine having 100 microservices that all do their own currency processing and all need to send their results to Kafka. Would it make sense to have developers hard code connectors into each microservice, giving the Kafka administrator many points of failure to diagnose? How about supporting multiple client libraries because some services are written in NodeJS, some in Go, and some in Python?

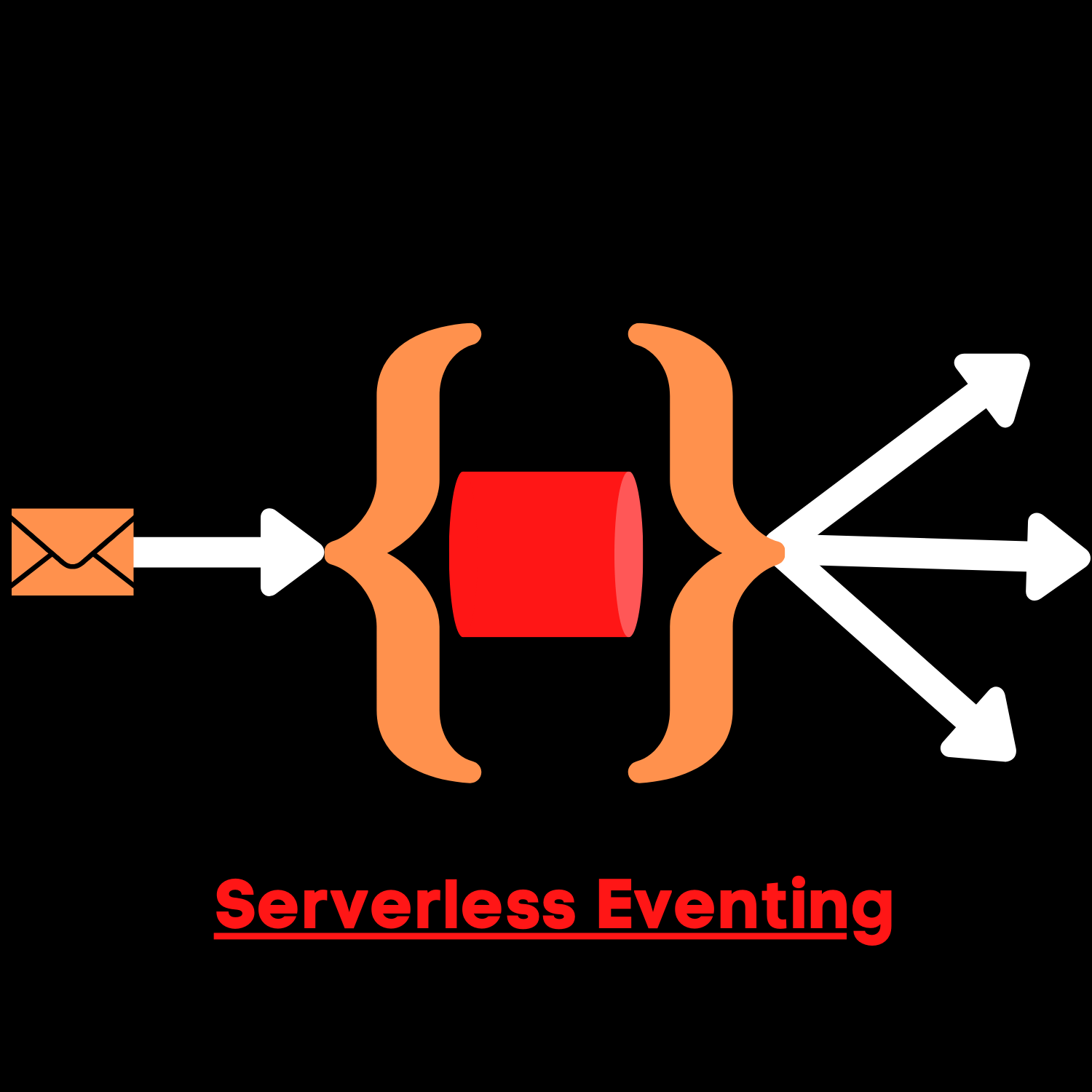

You create a service that simply egresses the data out of the container and let the SinkBinding determine where those messages should go. Then you can connect to an event sink, which can be one service or a multitude of services using Channels, Brokers, and Triggers. You conceivably have one code base to handle the Kafka event production while supporting multiple microservices.
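To make the idea concrete, here is a minimal sketch of what such an egress-only service could look like. It is not the code from the tutorial. It assumes the SinkBinding has injected the sink address into the container as the `K_SINK` environment variable, and it posts the data as a binary-mode CloudEvent over HTTP. The event type `dev.example.currency.rates`, the source `currency-poller`, and the sample rates are made-up values for illustration only.

```python
import json
import os
import urllib.request
import uuid


def build_cloudevent_request(sink_url, rates):
    """Build an HTTP POST that carries `rates` as a binary-mode CloudEvent."""
    headers = {
        "Content-Type": "application/json",
        "ce-specversion": "1.0",
        "ce-id": str(uuid.uuid4()),
        # Hypothetical type and source, chosen only for this sketch.
        "ce-type": "dev.example.currency.rates",
        "ce-source": "currency-poller",
    }
    body = json.dumps(rates).encode("utf-8")
    return urllib.request.Request(
        sink_url, data=body, headers=headers, method="POST"
    )


def send_rates(rates):
    """Send rates to whatever sink the SinkBinding points this container at."""
    # Assumption: K_SINK is set by the SinkBinding; the service itself
    # contains no Kafka client and no broker address.
    sink_url = os.environ["K_SINK"]
    request = build_cloudevent_request(sink_url, rates)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    # Only attempt delivery when running under a SinkBinding.
    if "K_SINK" in os.environ:
        # Made-up sample payload.
        print(send_rates({"base": "USD", "EUR": 0.91}))
```

The service never imports a Kafka library. Repointing it at a different sink, or at a Broker that fans out to several services, would be a change to the SinkBinding rather than to this code.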

Next time, we will learn how to consume with Serverless Eventing.