Serverless Eventing - Eventing Diagram

Jason Smith

January 22, 2021

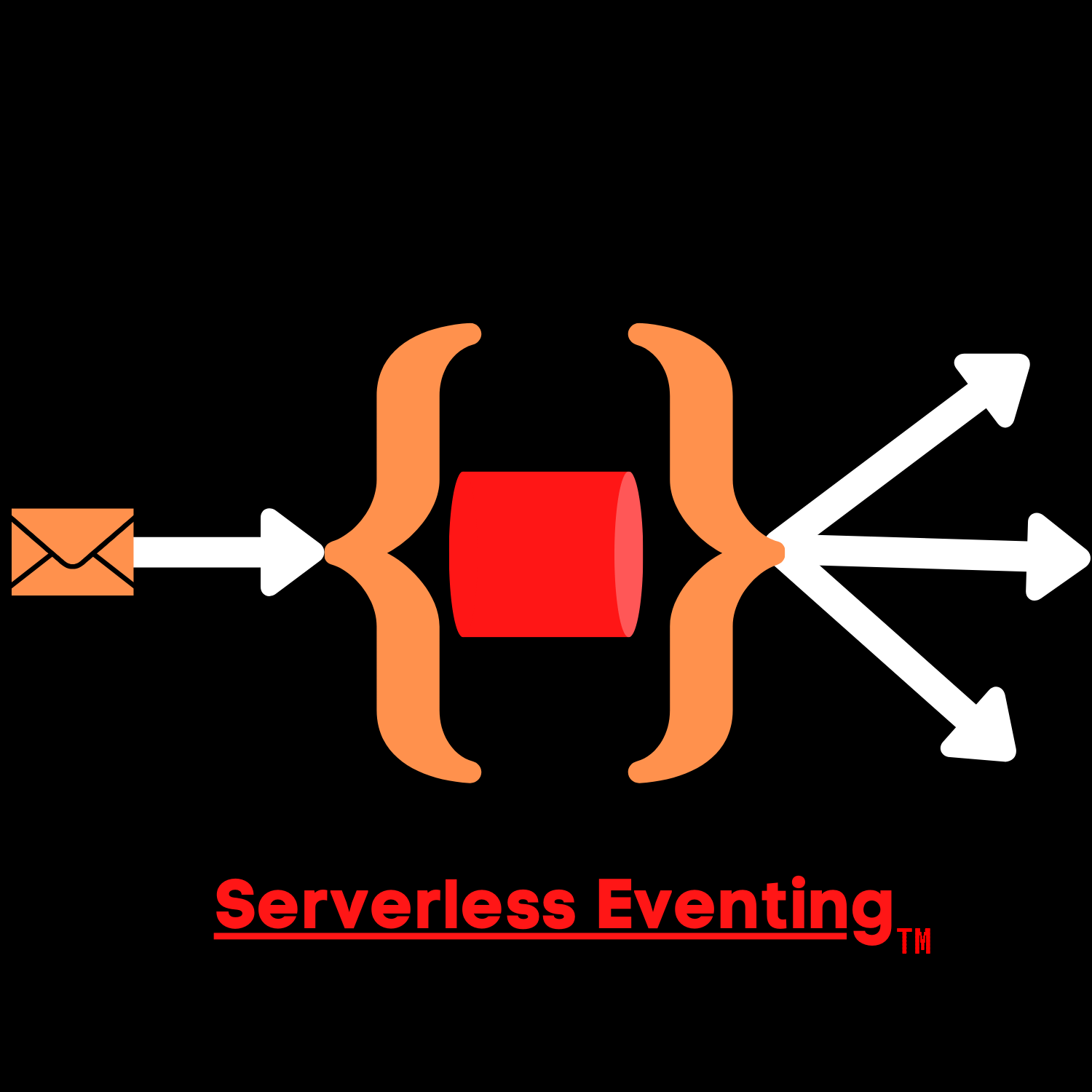

As I write more about Serverless Eventing, some people have asked me what it actually looks like. Admittedly, I tend to be more verbal than visual, as graphics have never been my strong suit. So in this post, I decided to create a diagram that attempts to explain not just WHAT Serverless Eventing is but WHY it matters.

THE LEGACY WAY

So let’s say that you are a backend developer for a mobile application. You plan on having millions of users, which means millions upon millions of events. You set up a Kafka cluster to handle those events, and the mobile application sends multiple messages to a given set of Kafka topics. This is a pretty simple and fairly standard setup.

Your application hits various endpoints hosted on a Functions as a Service (FaaS) platform. These functions then write each message to a given topic. Typically, these functions have to import some kind of client library to make the writing easier. Then, of course, you need to imperatively bind to the topic. This means using what we’ll call a ‘driver’ to tell your code which topic to write to, which bootstrap server to connect to, and how to authenticate.

Then, of course, there is the receiving/consuming end. This would be your backend service that subscribes to that topic to pull data. The experience is similar: you write a ‘driver’ that connects to the proper topic and consumes the messages. The whole setup looks something like this:

Your function (producer) is using a driver to write to your Kafka Topic (Messaging Bus). Your backend service (consumer) must also connect using a driver to consume the event.
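To make that coupling concrete, here is a minimal Python sketch of the legacy pattern. It deliberately avoids a real Kafka client: `FakeKafkaCluster` and `DriverConfig` are hypothetical in-memory stand-ins, and the server address, topic, and credentials are made up. What it shows is that the producer and the consumer each carry their own copy of the bootstrap server, topic, and credentials.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DriverConfig:
    """Connection details every producer/consumer must carry (hypothetical fields)."""
    bootstrap_servers: str
    topic: str
    username: str
    password: str


class FakeKafkaCluster:
    """In-memory stand-in for a Kafka cluster; not a real client library."""

    def __init__(self, bootstrap_servers: str, credentials: dict[str, str]) -> None:
        self.bootstrap_servers = bootstrap_servers
        self._credentials = credentials
        self._topics: dict[str, list[bytes]] = defaultdict(list)

    def _connect(self, cfg: DriverConfig) -> None:
        # Each caller must point at the right server and present valid credentials.
        if cfg.bootstrap_servers != self.bootstrap_servers:
            raise ConnectionError(f"unknown bootstrap server {cfg.bootstrap_servers}")
        if self._credentials.get(cfg.username) != cfg.password:
            raise PermissionError(f"bad credentials for {cfg.username}")

    def produce(self, cfg: DriverConfig, message: bytes) -> None:
        self._connect(cfg)
        self._topics[cfg.topic].append(message)

    def consume(self, cfg: DriverConfig) -> list[bytes]:
        # Returns every message on the topic; no offsets or consumer groups here.
        self._connect(cfg)
        return list(self._topics[cfg.topic])


cluster = FakeKafkaCluster("kafka-0:9092", {"mobile-fn": "s3cret", "backend": "hunter2"})

# The FaaS function (producer) hard-codes its own driver settings...
producer_cfg = DriverConfig("kafka-0:9092", "user-events", "mobile-fn", "s3cret")
# ...and the backend service (consumer) hard-codes a second copy.
consumer_cfg = DriverConfig("kafka-0:9092", "user-events", "backend", "hunter2")

cluster.produce(producer_cfg, b'{"action": "login"}')
print(cluster.consume(consumer_cfg))
```

Multiply those two `DriverConfig` objects by every function, service, and topic, and the management problem described next becomes clear.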

Now keep in mind, this is one topic, one consumer, and one producer, so writing the connecting drivers isn’t a big deal. But what happens when you scale to hundreds, if not thousands, of services, topics, or brokers? Imagine trying to manage all of those connections and their credentials. This is where Serverless Eventing comes in.

THE SERVERLESS EVENTING WAY

With Serverless Eventing, you aren’t necessarily replacing your Messaging Bus; if anything, you are making it easier to use. Remember how I defined serverless technology back in this post? A quick summary: a serverless app needs to be stateless, scalable, and code-centric (no infrastructure to manage). The end goal is to make serverless applications simple for your developers. Developers care about code, not brokers, servers, networks, etc.

What if we take the same services and the same messaging bus but add an abstraction layer? That layer contains the “drivers” that connect to the proper broker, topic, etc. Our abstraction layer (Eventing Bus) then listens for traffic arriving as simple HTTP requests and determines where each message needs to go.
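As a rough sketch of the producer side, the function below builds a CloudEvents HTTP POST in binary mode, where the event attributes travel as `Ce-*` headers and the payload is the request body. The ingress URL follows the pattern Knative's multi-tenant broker uses for a broker named `default` in namespace `default`; treat it as an assumption and check your Broker's `status.address.url`. The event type and source values are hypothetical.

```python
import json
import urllib.request
import uuid

# Assumed ingress address for a Broker "default" in namespace "default".
BROKER_URL = "http://broker-ingress.knative-eventing.svc.cluster.local/default/default"


def build_event_request(event_type: str, source: str, data: dict) -> urllib.request.Request:
    """Build a CloudEvents binary-mode POST: attributes in Ce-* headers, data in the body."""
    body = json.dumps(data).encode("utf-8")
    headers = {
        "Ce-Id": str(uuid.uuid4()),   # unique per event
        "Ce-Specversion": "1.0",
        "Ce-Type": event_type,        # hypothetical type, used later for routing
        "Ce-Source": source,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(BROKER_URL, data=body, headers=headers, method="POST")


req = build_event_request("com.example.user.login", "/mobile-app", {"user": "alice"})
# urllib.request.urlopen(req) would send it; that only resolves from inside the cluster.
print(req.get_method(), req.full_url)
```

Nothing in the producer names a Kafka topic, bootstrap server, or credential; those details live with the broker.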

In terms of ingesting data, this same Eventing Bus uses triggers to send each message to the proper service. Rather than hard-coding a subscription into our services, we create a subscription object that collects the message and then egresses it to the proper service as an HTTP request. Simply create a route/endpoint like you would for any REST API, and your service will ingress the data.
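On the receiving side, the service is just an HTTP server. This sketch uses Python's standard-library `http.server`; the `/my-custom-path` route and the event type are illustrative assumptions, and the final request simulates the broker's delivery locally rather than talking to a real cluster.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received: list[dict] = []  # events delivered to this service, kept for inspection


class EventHandler(BaseHTTPRequestHandler):
    """A plain REST-style endpoint: the broker POSTs each event here over HTTP."""

    def do_POST(self) -> None:
        if self.path != "/my-custom-path":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        # In binary mode, CloudEvent attributes arrive as Ce-* headers
        # (header lookup here is case-insensitive).
        received.append({"type": self.headers.get("Ce-Type"), "data": payload})
        self.send_response(202)  # a 2xx response acknowledges the delivery
        self.end_headers()

    def log_message(self, format, *args) -> None:  # silence per-request logging
        pass


# Port 0 picks a free port; a real service would listen on a fixed one.
server = HTTPServer(("127.0.0.1", 0), EventHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Simulate the broker delivering one event to the service.
url = f"http://127.0.0.1:{server.server_address[1]}/my-custom-path"
delivery = urllib.request.Request(
    url,
    data=json.dumps({"user": "alice"}).encode(),
    headers={"Ce-Type": "com.example.user.login", "Content-Type": "application/json"},
    method="POST",
)
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # ignore proxy env vars
with opener.open(delivery) as resp:
    print(resp.status, received)

server.shutdown()
server.server_close()
```

The handler contains no broker, topic, or credential logic at all; it only has to expose an endpoint.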

A good visual representation can be seen below:

In this example, you may have an outside service: one that exists outside of your Kubernetes cluster or even outside of your data center. It publishes an event to the messaging bus’s topic.

With Knative Eventing, you can create an Eventing Broker that effectively subscribes to the topic(s) in the message bus. Notice how this broker can also consume other events if you choose to do so.

You then create a trigger that collects your messages, filters them based on whatever criteria you choose, and routes them to a service via a subscription (represented by the yellow triangle).
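The trigger's routing decision can be modeled as a filter over event attributes. This is a simplified sketch, not Knative's implementation: it mirrors the exact-match semantics of a Trigger's `filter.attributes` (every listed attribute must match, and an empty filter matches every event), and the trigger names and subscriber URLs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Trigger:
    name: str
    subscriber: str  # URL the broker delivers matching events to
    # Exact-match filter on CloudEvent attributes; an empty dict matches everything.
    attributes: dict[str, str] = field(default_factory=dict)

    def matches(self, event: dict[str, str]) -> bool:
        return all(event.get(key) == value for key, value in self.attributes.items())


def route(event: dict[str, str], triggers: list[Trigger]) -> list[str]:
    """Return every subscriber URL that should receive this event (fan-out allowed)."""
    return [t.subscriber for t in triggers if t.matches(event)]


triggers = [
    Trigger(
        "login-trigger",
        "http://my-service.default.svc.cluster.local/my-custom-path",
        {"type": "com.example.user.login"},
    ),
    Trigger("audit-trigger", "http://audit.default.svc.cluster.local/"),
]

event = {"type": "com.example.user.login", "source": "/mobile-app", "id": "1"}
print(route(event, triggers))
```

Because both triggers match a login event, it fans out to two subscribers; a `com.example.user.logout` event would reach only the catch-all audit subscriber.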

This relationship can be represented as a Kubernetes object, allowing you to declare it with simple YAML like what you see below.

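```yaml
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: my-service-trigger
spec:
  broker: default          # the Broker this Trigger listens to
  subscriber:              # where matching events are delivered over HTTP
    ref:
      apiVersion: v1
      kind: Service
      name: my-service
    uri: /my-custom-path   # relative path, resolved against the Service's address
```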
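To see how `ref` and `uri` combine, the sketch below resolves the subscriber of that Trigger into a delivery URL. It is a simplified model under stated assumptions: the Trigger lives in a `default` namespace (the YAML does not say), a ref without its own namespace inherits the Trigger's, a core `v1` Service resolves to its cluster DNS name, and a relative `uri` is joined onto that address.

```python
from urllib.parse import urljoin

# The Trigger above as a plain dict; the namespace is an assumption.
trigger = {
    "apiVersion": "eventing.knative.dev/v1",
    "kind": "Trigger",
    "metadata": {"name": "my-service-trigger", "namespace": "default"},
    "spec": {
        "broker": "default",
        "subscriber": {
            "ref": {"apiVersion": "v1", "kind": "Service", "name": "my-service"},
            "uri": "/my-custom-path",
        },
    },
}


def resolve_subscriber(trigger: dict) -> str:
    """Approximate the delivery URL for a subscriber made of a ref plus an optional uri."""
    subscriber = trigger["spec"]["subscriber"]
    ref = subscriber["ref"]
    if (ref["apiVersion"], ref["kind"]) != ("v1", "Service"):
        raise ValueError("this sketch only resolves core v1 Services")
    # Assumed: a ref without a namespace uses the Trigger's namespace.
    namespace = ref.get("namespace", trigger["metadata"]["namespace"])
    base = f"http://{ref['name']}.{namespace}.svc.cluster.local/"
    uri = subscriber.get("uri")
    # A relative uri next to a ref is resolved against the ref's address.
    return urljoin(base, uri) if uri else base


print(resolve_subscriber(trigger))
```

This is why the service in the earlier handler only needs to expose `/my-custom-path`; the Trigger, not the service, decides which events land there.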

LET’S MAKE IT EASIER

The net-net here is that Serverless Eventing helps decouple events from their sources. You get an additional layer that lets you declaratively bind events from source to sink. You can offload the management of brokers to your Data Management team, while your developers simply focus on writing applications that handle standard ingress and egress.

In a later post, we’ll dive a bit deeper into how to do this at scale and bind with a CLI tool.